What one would like for practical purposes is that the simulations are conditioned on knowledge that is already available, in particular on information from well logs. Now, as long as there is only one well, this is easily done in the way described before: simply use the information from the vertical well as initial states for the left-hand column of the simulation to be generated. (The states in the top row are obtainable too, as they describe the surface.) However, a particularly interesting situation is one where there is information from two wells. How is conditioning going to be performed in this case? Already in the one-dimensional case such conditioning does not seem feasible at first sight: a Markov chain typically evolves from past to future, so how can you correctly fill in the near future if you already know the far future?

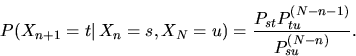

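Actually, it is mathematically very simple to accomplish this. Let $Z_0, Z_1, \dots, Z_N$ be the chain of states at times $0, 1, \dots, N$, and let $P = (p_{st})$ be the matrix of transition probabilities, i.e., for all states $s$ and $t$

$$p_{st} = \mathbb P(Z_{i+1}=t \mid Z_i=s).$$

Then, for $1 \le i \le N$ and any end state $u$ with $p^{(N-i+1)}_{su} > 0$,

$$\mathbb P(Z_i=t \mid Z_{i-1}=s,\ Z_N=u) = \frac{p_{st}\, p^{(N-i)}_{tu}}{p^{(N-i+1)}_{su}},$$

where $p^{(k)}_{su}$ denotes the $(s,u)$ entry of the $k$-step transition matrix $P^k$ (with $P^0$ the identity). This follows from the definition of conditional probability and the Markov property; by the Markov property it also makes no difference whether one additionally conditions on $Z_0, \dots, Z_{i-2}$.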
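The one-dimensional conditioning formula, $\mathbb P(Z_i=t \mid Z_{i-1}=s, Z_N=u) = p_{st}\,p^{(N-i)}_{tu}/p^{(N-i+1)}_{su}$, turns directly into a sampler that draws the chain step by step. The sketch below assumes a finite state space labelled $0, 1, \dots$, a known homogeneous transition matrix, and plain Python with no numerical library; the names `matrix_powers` and `sample_bridge` and the three-state matrix in the usage example are hypothetical, chosen only for illustration.

```python
import random


def mat_mult(a, b):
    """Product of two square matrices stored as lists of lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def matrix_powers(p, n):
    """Return [P^0, P^1, ..., P^n]; P^0 is the identity matrix."""
    size = len(p)
    powers = [[[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]]
    for _ in range(n):
        powers.append(mat_mult(powers[-1], p))
    return powers


def sample_bridge(p, start, end, n_steps, rng):
    """Sample Z_0, ..., Z_N (N = n_steps) with Z_0 = start and Z_N = end.

    With s = Z_{i-1}, the state Z_i = t is drawn with probability
    p[s][t] * P^(N-i)[t][end] / P^(N-i+1)[s][end].
    """
    powers = matrix_powers(p, n_steps)
    if powers[n_steps][start][end] == 0:
        raise ValueError("end state cannot be reached from start in n_steps")
    states = range(len(p))
    chain = [start]
    for i in range(1, n_steps + 1):
        s = chain[-1]
        k = n_steps - i  # steps still to go after Z_i
        weights = [p[s][t] * powers[k][t][end] / powers[k + 1][s][end]
                   for t in states]
        chain.append(rng.choices(states, weights=weights)[0])
    return chain


if __name__ == "__main__":
    # Hypothetical three-state transition matrix (each row sums to 1).
    P = [[0.6, 0.3, 0.1],
         [0.2, 0.5, 0.3],
         [0.1, 0.4, 0.5]]
    print(sample_bridge(P, start=0, end=2, n_steps=8, rng=random.Random(42)))
```

Precomputing all powers $P^0, \dots, P^N$ once keeps each step to a few multiplications. Because a state $t$ is only drawn when $p^{(N-i)}_{tu} > 0$, the denominator stays positive for the rest of the chain, and the last draw always returns the prescribed end state.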

The conditional formula above yields a cheap way to generate Markov chain realisations conditioned on the future. The two-dimensional case is much more complicated; it is not even clear what "future" means in that case. In the geological application, however, it is clear what one wants to condition on: the leftmost and the rightmost column, which represent data from two wells. In our paper an "engineering" solution to the conditioning problem has been chosen: the horizontal chain is conditioned as described above, and then this conditioned chain is coupled to the vertical chain. For exact conditioning it is useful to note (see also Galbraith and Walley) that a unilateral Markov random field can also be described by a one-dimensional Markov chain in a random environment (the random environment is generated by the chain itself). In fact, define for each state $r$ the matrix $P^{[r]}$ by

$$P^{[r]}_{st} = \mathbb P(Z_{m+1,n}=t \mid Z_{mn}=s,\ Z_{m+1,n-1}=r).$$

Then, for $M \ge 1$,

$$\mathbb P(Z_{m+M,n}=t \mid Z_{m,n}=s,\ Z_{m+1,n-1}=r_1,\dots,Z_{m+M,n-1}=r_M) = \bigl(P^{[r_1]}P^{[r_2]}\cdots P^{[r_M]}\bigr)_{st}.$$
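The product formula can be checked numerically: the $(s,t)$ entry of $P^{[r_1]}\cdots P^{[r_M]}$ should equal the chain-rule sum over all intermediate states $Z_{m+1,n}, \dots, Z_{m+M-1,n}$ of the row. The sketch below does this by brute force. It assumes the matrices $P^{[r]}$ are already given; the dictionary `p_env` for a two-state field, its entries, and the helper names `environment_product` and `path_sum` are hypothetical and serve only as an illustration.

```python
from itertools import product


def mat_mult(a, b):
    """Product of two square matrices stored as lists of lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def environment_product(p_env, upper):
    """P^[r_1] P^[r_2] ... P^[r_M] for upper-row states upper = [r_1, ..., r_M]."""
    size = len(p_env[upper[0]])
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    for r in upper:
        result = mat_mult(result, p_env[r])
    return result


def path_sum(p_env, upper, s, t):
    """Same probability obtained by summing the chain rule over all
    intermediate states of the row between column m and column m+M."""
    size = len(p_env[upper[0]])
    total = 0.0
    for middle in product(range(size), repeat=len(upper) - 1):
        path = (s, *middle, t)
        prob = 1.0
        for k, r in enumerate(upper):
            # one horizontal step, governed by the upper neighbour r_{k+1}
            prob *= p_env[r][path[k]][path[k + 1]]
        total += prob
    return total


if __name__ == "__main__":
    # Hypothetical matrices P^[r] for a two-state field (rows sum to 1).
    p_env = {0: [[0.8, 0.2], [0.3, 0.7]],
             1: [[0.4, 0.6], [0.1, 0.9]]}
    upper = [0, 1, 1, 0]  # r_1, ..., r_M with M = 4
    prod_matrix = environment_product(p_env, upper)
    for s in range(2):
        for t in range(2):
            print(s, t, prod_matrix[s][t], path_sum(p_env, upper, s, t))
```

The brute-force sum grows exponentially in $M$ and is only meant as a check; the matrix product is what one would use in practice.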

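Conditioning in addition on the value $u$ observed in the right-hand well, one obtains

$$\mathbb P(Z_{m+1,n}=t \mid Z_{mn}=s,\ Z_{m+1,n-1}=r_1,\dots,Z_{m+M-n+1,n-1}=r_{M-n+1},\ Z_{m+M-n+1,n}=u) = \frac{P^{[r_1]}_{st}\,\bigl(P^{[r_2]}\cdots P^{[r_{M-n+1}]}\bigr)_{tu}}{\bigl(P^{[r_1]}\cdots P^{[r_{M-n+1}]}\bigr)_{su}}.$$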
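A minimal sketch of filling in one row between the two wells with this ratio formula, $P^{[r_1]}_{st}\,(P^{[r_2]}\cdots P^{[r_K]})_{tu}/(P^{[r_1]}\cdots P^{[r_K]})_{su}$, where $K$ is the number of upper-row states up to the right-hand well ($K = M-n+1$ in the notation above). It assumes the row above has already been generated, the matrices $P^{[r]}$ are known and do not depend on position, and states are labelled $0, 1, \dots$. The function name `sample_row_between_wells` and the example matrices are hypothetical; the sketch illustrates the exact-conditioning formula only, not the "engineering" coupling used in the paper.

```python
import random


def mat_mult(a, b):
    """Product of two square matrices stored as lists of lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def sample_row_between_wells(p_env, upper, left, right, rng):
    """Sample Z_{m,n}, ..., Z_{m+K,n} given Z_{m,n} = left (left well),
    Z_{m+K,n} = right (right well) and upper = [r_1, ..., r_K]."""
    size = len(p_env[upper[0]])
    n_cols = len(upper)
    # suffix[k] = P^[r_{k+1}] ... P^[r_K] (0-based k); suffix[K] = identity.
    suffix = [None] * (n_cols + 1)
    suffix[n_cols] = [[1.0 if i == j else 0.0 for j in range(size)]
                      for i in range(size)]
    for k in range(n_cols - 1, -1, -1):
        suffix[k] = mat_mult(p_env[upper[k]], suffix[k + 1])
    if suffix[0][left][right] == 0:
        raise ValueError("right-well state cannot be reached from the left well")
    states = range(size)
    row = [left]
    for k in range(n_cols):
        s = row[-1]
        step = p_env[upper[k]]
        # Ratio formula: P^[r]_{st} * (remaining product)_{tu} / (full product)_{su}
        weights = [step[s][t] * suffix[k + 1][t][right] / suffix[k][s][right]
                   for t in states]
        row.append(rng.choices(states, weights=weights)[0])
    return row


if __name__ == "__main__":
    # Hypothetical matrices P^[r] for a two-state field (rows sum to 1).
    p_env = {0: [[0.8, 0.2], [0.3, 0.7]],
             1: [[0.4, 0.6], [0.1, 0.9]]}
    upper = [0, 1, 1, 0, 1]  # states of the row above, r_1, ..., r_K
    print(sample_row_between_wells(p_env, upper, left=0, right=1,
                                   rng=random.Random(7)))
```

Each draw is proportional to $P^{[r]}_{st}$ times the probability of still reaching $u$ from $t$ through the remaining environment, so the last column always reproduces the right-well value.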